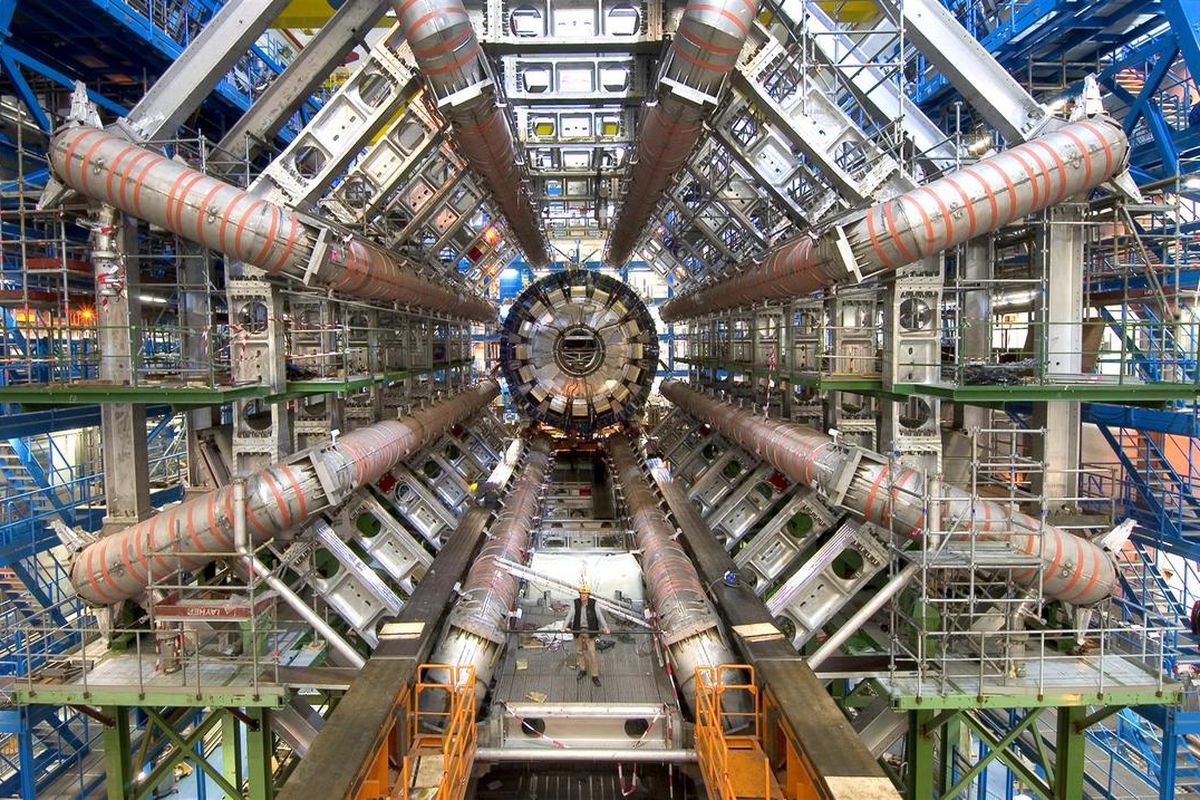

Analysing Vast Datasets – How CERN Do It

I remember, a number of years ago, being very excited by the prospect of working on a dataset containing 1m records. A recent dataset White Space analysed contained over 45m records. So on a recent tour of CERN, the nuclear research facility, I listened with interest to how its teams approach the analysis of vast datasets. How are they collecting and analysing data to crack the mysteries of the universe, and what could the commercial sector learn from this?

1. Think big

CERN and its Large Hadron Collider (LHC) detectors can record 10 million proton collisions every second. This generates a vast amount of data. By 2013, 100 petabytes had already been collected, with data being added at a rate of 25 petabytes per year. That is roughly 21m DVDs' worth of data by 2013, with around 5m more added each year.
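The DVD comparison is easy to sanity-check. The sketch below is only a rough illustration: it assumes a single-layer DVD holds 4.7 GB and that a petabyte is 10^15 bytes, neither of which is stated in the tour figures, and the function name is made up for this example.

```python
# Assumptions (not from the CERN figures): decimal petabytes and
# single-layer DVDs with a capacity of 4.7 GB.
PETABYTE_BYTES = 10**15
DVD_BYTES = 4_700_000_000


def dvds_for(petabytes):
    """Return how many DVDs would be needed to hold the given petabytes."""
    return petabytes * PETABYTE_BYTES / DVD_BYTES


collected_by_2013 = dvds_for(100)  # 100 PB collected by 2013
added_per_year = dvds_for(25)      # 25 PB added each year

print(f"Collected by 2013: about {collected_by_2013 / 1e6:.0f}m DVDs")
print(f"Added per year: about {added_per_year / 1e6:.0f}m DVDs")
```

Under those assumptions the results round to the 21m and 5m DVDs quoted above.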

This vast data resource creates a fantastic analysis platform. Commercial organisations should take note of the scale of this ambition, and of the optimism which underpins it.

2. Turn a data mountain into a molehill

Collisions in the LHC produce too much data to record. To tackle this, CERN uses data selection to focus on the interesting physics. Algorithms flag the collisions and events that are considered interesting, and the rest are discarded. Analysis can then focus on the most important data. In the first stage of selection, the number of events is filtered from 600m per second to 100,000 per second. A further stage of selection reduces this to only 100 to 200 events per second, a far more manageable volume of data.
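To give a feel for what two-stage selection looks like in practice, here is a minimal sketch. It is not CERN's actual trigger software: the event fields (`energy`, `tracks`), the thresholds and the randomly generated events are all hypothetical, chosen only to show a cheap first filter feeding a stricter second one. The rates at the top are the ones quoted above.

```python
import random

# Event rates quoted in the text (events per second).
RAW_RATE = 600_000_000
STAGE_ONE_RATE = 100_000
STAGE_TWO_RATES = (100, 200)


def reduction_factor(before, after):
    """How many times smaller the stream is after a selection stage."""
    return before / after


def stage_one(events, min_energy):
    """Cheap first pass: keep events above a (hypothetical) energy threshold."""
    return (e for e in events if e["energy"] >= min_energy)


def stage_two(events, min_tracks):
    """Stricter second pass, run only on the events that survived stage one."""
    return (e for e in events if e["tracks"] >= min_tracks)


def make_events(n, seed=0):
    """Generate made-up events purely for illustration."""
    rng = random.Random(seed)
    return [
        {"energy": rng.random(), "tracks": rng.randint(0, 20)}
        for _ in range(n)
    ]


events = make_events(100_000)
kept = list(stage_two(stage_one(events, min_energy=0.99), min_tracks=18))

print(f"Stage one cuts the quoted rate by {reduction_factor(RAW_RATE, STAGE_ONE_RATE):,.0f}x")
for rate in STAGE_TWO_RATES:
    print(f"Overall cut at {rate} events/s: {reduction_factor(RAW_RATE, rate):,.0f}x")
print(f"Toy example kept {len(kept)} of {len(events)} events")
```

The point of chaining the stages is that the expensive check only ever sees what the cheap check lets through, which is the same principle a commercial team can apply before running heavy analysis on a large dataset.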

This data selection process is highly relevant to the commercial sector. You don’t need to analyse ALL of the data. You just need a clever way of figuring out where best to look.

3. Find ways of making a big investment seem small

CERN’s experimentation and data collection cost big money. CERN and the LHC cost around $1bn a year to run. The UK contributes £100m per year. This is a lot of money in anyone’s book, especially for a theoretical science project which mainly aims to build knowledge rather than deliver more tangible benefits. How on earth do you justify this, and get buy-in to it?

Data teams within commercial corporations will recognise this challenge. It can cost tens of millions of pounds to set up, fund and deploy an effective data resource. So, think about the investment in a different way. Spread across its 21 member states, CERN actually costs only about the price of a cup of coffee per person per year. A bargain!

In summary, CERN shows that vast datasets can be generated, and that it’s possible to swim in a lake of data rather than drown. Their discovery of the Higgs boson in 2012 demonstrates this. If we’re being honest, commercial data teams are unlikely to discover anything quite so significant in their datasets. However, by taking a leaf out of CERN’s book, thinking big but analysing small, they may just find things that revolutionise their own growth and development within this universe.

How White Space Strategy can help

If you’re looking to generate more value from an abundance of data, need to bring disparate datasets together or are just starting out on your data-driven decision-making journey, White Space Strategy can help.

Our toolkit includes Excel, SPSS, Alteryx and Tableau to analyse and visualise data. See how we helped British Red Cross triple their profits in 2 years by combining public data with their sales data, and how we futureproofed Haverstock Healthcare by building a tool to manage their workload more effectively.

If you’d like to discuss this with us further, we’d love to hear from you.

Blog author:

John Bee

Managing Director

Header Image: Maximilien Bern, CERN